In this issue:

Introductions

Physicians aren't afraid of AI; they're afraid of losing control

The first real RCTs on AI scribes – they save time, but not as much as you’d think

Patients are bypassing doctors and going straight to AI

Quick hits

Introductions

Welcome to the first edition of Prompted Care, a newsletter written for physicians and the curious. I'm Ankit Gordhandas, co-founder of Pear, and I started this because the AI conversation in healthcare is moving fast and most of what's written about it is either hype or press releases. This newsletter is my attempt to cut through that: what's actually working, what the evidence says, and what it means for the people delivering care. Let's get into it.

Physicians aren't afraid of AI; they're afraid of losing control

A new survey of 1,000+ physicians across 106 specialties just dropped, and the headline isn't what you'd expect.

Offcall, a physician salary transparency platform, released their 2025 Physicians AI Report this week. The findings challenge the dominant narrative that doctors are resistant to AI.

They're not. They're already using it—67% daily. And 84% say it makes them better at their jobs.

So why are 81% dissatisfied with how AI is being deployed at their organizations?

Because they have almost no say in it. 71% report having little to no influence on which AI tools get adopted. Nearly half say their employer's communication about AI is poor. And 89% believe they should receive dedicated funding for AI tools—funding most aren't getting.

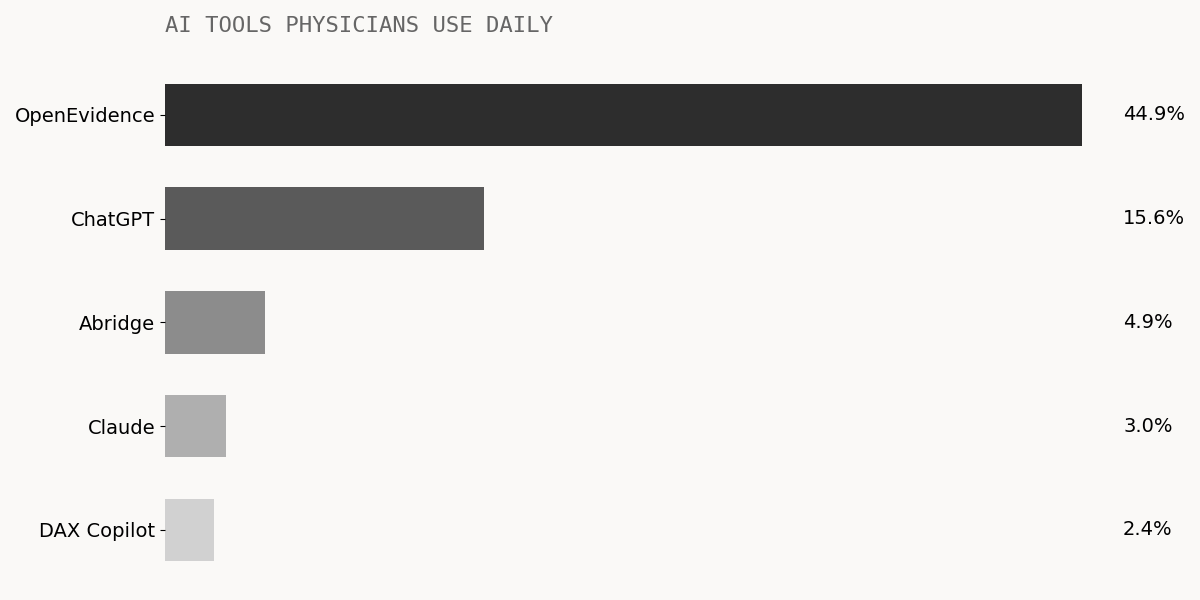

The tools physicians are actually choosing tell an interesting story:

What do they actually want AI to do? The #1 request: "Eliminate manual documentation completely." 65% prioritize documentation and administrative relief. Only 43% rank clinical decision support as their top need. However, OpenEvidence (a platform for medical information) and ChatGPT account for the vast majority of usage among doctors.

The disconnect suggests physicians are finding ways to solve their own problems while their organizations focus elsewhere.

"Despite physician productivity gains from AI tools, physicians will have a higher patient volume to care for with no proportional increase in compensation. The C-suite is incentivized to use AI as a cost-cutting strategy."

The optimistic read: 78% believe AI improves patient health. 87% don't think it will take their jobs. 42% say they're more likely to stay in medicine because of AI adoption.

The cautionary read: The most sophisticated AI is being deployed by payers and administrators, not physicians. And the people who take care of patients are watching it happen without them.

The first real RCTs on AI scribes – they save time, but not as much as you’d think

We finally have randomized controlled trial data on ambient AI scribes—and the results are promising, but more modest than the marketing.

Two major studies were published this fall (and one more has appeared as a preprint, not yet peer reviewed):

NEJM AI, November 2025 — 238 physicians across 14 specialties, 72,000+ patient encounters. Randomized to Nabla, DAX Copilot, or control.

Results: Nabla reduced documentation time by about 10%—roughly 41 seconds per note. DAX showed smaller, non-significant gains. Both showed modest improvements in burnout (~7%). The catch: physicians reported "occasional clinically significant inaccuracies."

JAMA Network Open, October 2025 — 263 physicians across 6 health systems using Abridge.

The NEJM AI editorial title says it all: "AI Scribes Are Not Productivity Tools (Yet)."

The realistic take: 41 seconds per note, across a full day of patients, might add up to 10-15 minutes. That's meaningful—but it's not the "hours back in your day" that vendor pitches promise. And you still need to review every note for accuracy.
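For readers who want to check that arithmetic against their own schedule, here is a minimal sketch. The 41 seconds per note comes from the trial figure above; the notes-per-day values are illustrative assumptions, not numbers from the study, and the function name is made up for this example.

```python
# Seconds saved per note, as quoted above from the NEJM AI trial.
SECONDS_SAVED_PER_NOTE = 41


def minutes_saved_per_day(notes_per_day: int,
                          seconds_per_note: int = SECONDS_SAVED_PER_NOTE) -> float:
    """Convert a per-note saving into minutes saved over a clinic day."""
    return notes_per_day * seconds_per_note / 60


# Hypothetical daily note counts; swap in your own volume.
for notes in (15, 18, 22):
    print(f"{notes} notes/day: {minutes_saved_per_day(notes):.1f} minutes saved")
```

Under these assumptions, a day of roughly 15 to 22 notes lands in the 10 to 15 minute range mentioned above. A lighter or heavier schedule shifts the total proportionally.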

The hopeful take: These are first-generation tools. The burnout improvements are real. And documentation is only one piece of the administrative burden puzzle.

A larger European study paints a more optimistic picture. Across 375,000 notes generated by 1,295 clinicians in Sweden's Capio health system, documentation time dropped 29%—from 6.69 to 4.72 minutes per note. Administrative stress decreased 30%, and clinicians reported feeling 16% more present with patients. The median time to review and edit AI-generated notes was 93 seconds, and this stayed stable over time—no learning curve needed.

Patients are bypassing doctors and going straight to AI

Here's a fascinating news story: a mother took her 4-year-old son to 17 different doctors over three years without getting a diagnosis. After the latest inconclusive MRI, she fed the radiology report into ChatGPT line by line, including details like her son refusing to sit cross-legged. The AI suggested tethered cord syndrome. A neurosurgeon confirmed it. Surgery followed.

It's a compelling anecdote—and it's not isolated. About one in six American adults now use AI chatbots at least monthly for health information, according to a KFF poll. Among adults under 30, that jumps to 25%. Some are using it for general health questions. Others are attempting self-diagnosis.

The accuracy is mixed. A Stanford study found ChatGPT-4 alone scored 92 out of 100 on diagnostic reasoning, better than physicians working without or with AI access (who scored 74 and 76, respectively). But that's in controlled case scenarios. Real-world performance varies wildly. For common orthopedic conditions, ChatGPT's diagnostic accuracy ranged from inconsistent to occasionally correct, and it rarely urged patients to seek immediate medical attention when warranted.

For rare diseases, the picture changes. A recent study shows GPT-4 can identify rare conditions within its top 10 differential diagnoses in challenging cases at rates comparable to specialists, particularly when provided with detailed symptom descriptions. Another study on neuromyelitis optica spectrum disorder found ChatGPT correctly diagnosed 91% of cases that had stumped physicians initially and, in some instances, reached the diagnosis 861 days faster than the eventual clinical diagnosis. (That was with GPT-3.5. As of this writing, the latest OpenAI model is GPT-5.2, which is substantially more capable than GPT-3.5.)

AI self-diagnosis isn't replacing physician visits yet. But it's changing them. Patients are more informed—or think they are. The challenge for physicians is figuring out how to work with that, not against it.

Quick hits

FDA deploys agentic AI internally

As of December 1, all FDA employees have access to multi-step AI workflows for pre-market reviews, inspections, and compliance. Commissioner Makary: "Never been a better moment to modernize." (Read more)

Oracle's AI-first EHR is now ONC-certified

Built from scratch, voice-first, cloud-native. Ambulatory only for now—acute care coming 2026. Oracle has been losing market share (down to 23% vs Epic's 42%), and this is their bet on catching up. (Read more)

Up from ~950 a year ago. 77% are radiology tools. But a JAMA study found that 47% of FDA decision summaries don't describe the study design, and less than 1% report patient outcomes. (Read more)

Prompted Care is written by Ankit Gordhandas, co-founder of Pear, a company building AI for value-based care. This newsletter is editorially independent.

Have feedback? Reply to this email. Know someone who'd find this useful? Forward it along.